Results

In this project, various boosting algorithms and artificial neural networks were tested on simulated data to determine which is best suited to the upgraded system at ALICE.

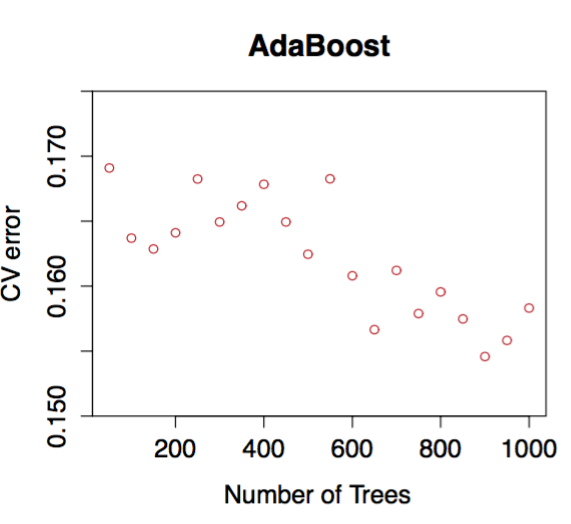

Of the boosting algorithms tested, ε-LogitBoost performed worst. ε-Boost outperformed AdaBoost with complex weak learners but underperformed it with simple weak learners. Because simple weak learners generalise better, AdaBoost was judged the best-performing boosting algorithm.
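The sketch below makes the differences between the three boosting schemes concrete. It follows one common formulation, which is assumed here and not taken from the project code. AdaBoost sets each weak learner's weight from its weighted error. ε-Boost and ε-LogitBoost instead add every weak learner with the same small fixed step `eps`, and reweight the samples from the exponential loss and the logistic loss respectively. Decision stumps stand in for the weak learners. The names `fit_stump`, `boost` and `predict`, and the default `eps=0.1`, are hypothetical.

```python
import numpy as np

def fit_stump(X, y, w):
    """Exhaustive weighted decision stump: one feature, one threshold, one polarity.
    y holds labels in {-1, +1}; w holds non-negative sample weights summing to 1."""
    best = (np.inf, 0, 0.0, 1)  # (weighted error, feature, threshold, polarity)
    for j in range(X.shape[1]):
        for thr in np.unique(X[:, j]):
            for pol in (1, -1):
                pred = np.where(X[:, j] <= thr, pol, -pol)
                err = w[pred != y].sum()
                if err < best[0]:
                    best = (err, j, thr, pol)
    return best

def stump_predict(stump, X):
    _, j, thr, pol = stump
    return np.where(X[:, j] <= thr, pol, -pol)

def boost(X, y, n_rounds=50, variant="adaboost", eps=0.1):
    """variant: "adaboost", "eps" (ε-Boost) or "logit" (ε-LogitBoost).
    Returns a list of (step, stump); the ensemble score is F(x) = sum(step * h(x))."""
    F = np.zeros(len(y))                              # current ensemble score on training data
    ensemble = []
    for _ in range(n_rounds):
        margin = y * F
        if variant == "logit":
            w = 0.5 * (1.0 - np.tanh(margin / 2))     # logistic-loss weights, equal to 1/(1+e^margin)
        else:
            w = np.exp(-(margin - margin.min()))      # exponential-loss weights, shifted for stability
        w = w / w.sum()
        stump = fit_stump(X, y, w)
        err = np.clip(stump[0], 1e-10, 1 - 1e-10)
        if variant == "adaboost":
            step = 0.5 * np.log((1 - err) / err)      # AdaBoost: step set by the weighted error
        else:
            step = eps                                # ε variants: small fixed step every round
        F += step * stump_predict(stump, X)
        ensemble.append((step, stump))
    return ensemble

def predict(ensemble, X):
    score = np.zeros(len(X))
    for step, stump in ensemble:
        score += step * stump_predict(stump, X)
    return np.where(score >= 0, 1, -1)
```

For example, `boost(X, y, variant="logit")` builds the ε-LogitBoost ensemble, and `predict` turns its summed score into class labels. Labels are assumed to be in {-1, +1}.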

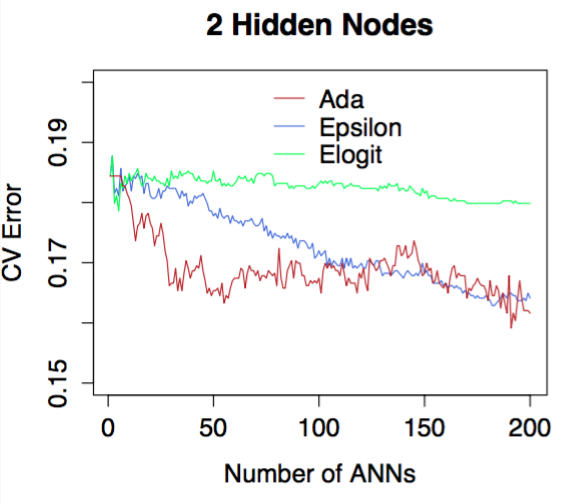

Artificial neural networks with two or three hidden nodes performed better as weak learners than decision trees did. These small networks learnt more slowly and kept improving for longer than the other weak learners tested.

As a result, the best-performing boosting configuration was AdaBoost using artificial neural networks with two nodes in the hidden layer as weak learners.
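The configuration above can be sketched as follows. This is a minimal illustration under stated assumptions, not the project's implementation. Each weak learner is a network with one hidden layer of two sigmoid nodes. It is trained by full-batch gradient descent on a cross-entropy loss weighted by the current AdaBoost sample weights. The class `TinyANN`, its learning rate, initialisation and epoch count, and the functions `adaboost` and `predict` are all hypothetical.

```python
import numpy as np

def sigmoid(z):
    return 1.0 / (1.0 + np.exp(-z))

class TinyANN:
    """Hypothetical weak learner: one hidden layer of n_hidden sigmoid nodes and a
    sigmoid output, fitted by full-batch gradient descent on weighted cross-entropy."""

    def __init__(self, n_hidden=2, lr=1.0, epochs=1000, seed=0):
        self.n_hidden, self.lr, self.epochs = n_hidden, lr, epochs
        self.rng = np.random.default_rng(seed)

    def fit(self, X, y, w):
        t = (y + 1) / 2                                   # labels {-1, +1} -> targets {0, 1}
        self.W1 = self.rng.normal(0.0, 1.0, (X.shape[1], self.n_hidden))
        self.b1 = np.zeros(self.n_hidden)
        self.W2 = self.rng.normal(0.0, 1.0, self.n_hidden)
        self.b2 = 0.0
        for _ in range(self.epochs):
            H = sigmoid(X @ self.W1 + self.b1)            # hidden activations, shape (n, h)
            p = sigmoid(H @ self.W2 + self.b2)            # output probabilities, shape (n,)
            g = w * (p - t)                               # gradient w.r.t. output pre-activation
            gH = np.outer(g, self.W2) * H * (1.0 - H)     # back-propagated through hidden sigmoid
            self.W2 -= self.lr * (H.T @ g)
            self.b2 -= self.lr * g.sum()
            self.W1 -= self.lr * (X.T @ gH)
            self.b1 -= self.lr * gH.sum(axis=0)
        return self

    def predict(self, X):
        H = sigmoid(X @ self.W1 + self.b1)
        return np.where(sigmoid(H @ self.W2 + self.b2) >= 0.5, 1, -1)

def adaboost(X, y, n_rounds=20, n_hidden=2):
    """Discrete AdaBoost with TinyANN weak learners; y holds labels in {-1, +1}."""
    w = np.full(len(y), 1.0 / len(y))                    # start from uniform sample weights
    ensemble = []
    for m in range(n_rounds):
        learner = TinyANN(n_hidden=n_hidden, seed=m).fit(X, y, w)
        pred = learner.predict(X)
        err = np.clip(w[pred != y].sum(), 1e-10, 1 - 1e-10)
        if err >= 0.5:                                    # no better than chance: stop boosting
            break
        alpha = 0.5 * np.log((1 - err) / err)
        w = w * np.exp(-alpha * y * pred)                 # up-weight misclassified samples
        w = w / w.sum()
        ensemble.append((alpha, learner))
    return ensemble

def predict(ensemble, X):
    score = np.zeros(len(X))
    for alpha, learner in ensemble:
        score += alpha * learner.predict(X)
    return np.where(score >= 0, 1, -1)
```

Passing `n_hidden=3` to `adaboost` gives the three-node variant. Because each weak learner is itself trained iteratively, this configuration is noticeably heavier to train than boosting with decision stumps.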

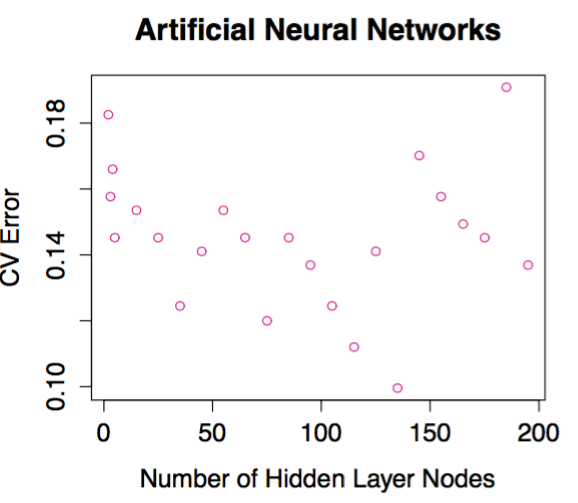

Artificial neural networks using the sigmoid activation function learnt the data better than those using the fast-sigmoid activation function, and overfitted the data less than those using the tanh activation function. Networks with a single hidden layer performed best. The best-performing artificial neural network was therefore one with a single hidden layer and the sigmoid activation function.
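The three activation functions differ in output range and in how quickly they saturate. The sketch below assumes the common rational form x / (1 + |x|) for the fast sigmoid, rescaled to (0, 1). The project may have used a different approximation. The helper `forward` is a hypothetical single-hidden-layer network with a sigmoid output node, and the layer sizes in the usage lines are arbitrary.

```python
import numpy as np

def sigmoid(x):
    return 1.0 / (1.0 + np.exp(-x))            # range (0, 1)

def fast_sigmoid(x):
    # Assumed form: the rational approximation x / (1 + |x|), which needs no exp(),
    # shifted and scaled from (-1, 1) to (0, 1) so it can replace sigmoid directly.
    return 0.5 * x / (1.0 + np.abs(x)) + 0.5

def tanh(x):
    return np.tanh(x)                           # range (-1, 1), zero-centred

ACTIVATIONS = {"sigmoid": sigmoid, "fast_sigmoid": fast_sigmoid, "tanh": tanh}

def forward(X, W1, b1, W2, b2, activation="sigmoid"):
    """Hypothetical single-hidden-layer network: the chosen activation on the hidden
    layer and a sigmoid output node giving a score in (0, 1)."""
    hidden = ACTIVATIONS[activation](X @ W1 + b1)
    return sigmoid(hidden @ W2 + b2)

# Usage with arbitrary sizes: 5 events, 3 input features, 4 hidden nodes.
rng = np.random.default_rng(0)
X = rng.normal(size=(5, 3))
W1, b1 = rng.normal(size=(3, 4)), np.zeros(4)
W2, b2 = rng.normal(size=4), 0.0
scores = {name: forward(X, W1, b1, W2, b2, name) for name in ACTIVATIONS}
```

For example, at x = 5 the sigmoid is already about 0.993, while the rational fast sigmoid is only about 0.917. The fast sigmoid approaches its limits polynomially rather than exponentially.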

The best-performing artificial neural network (a single hidden layer with the sigmoid activation function) was compared with the best-performing boosting algorithm (AdaBoost using artificial neural networks with two nodes in the hidden layer as weak learners). The artificial neural network was both more accurate and computationally more efficient, for training as well as for classification.
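One rough way to reason about classification cost is to count the operations in a forward pass. The sketch below does this for fully connected networks, ignoring bias additions, memory access and implementation overhead. The sizes in the usage lines (10 inputs, 20 hidden nodes, 100 boosting rounds) are hypothetical and are not the sizes used in the project. Boosting also has to train its weak learners one after another, since each round depends on the sample weights produced by the previous one.

```python
def ann_cost(n_inputs, n_hidden):
    """Per-event cost of one fully connected single-hidden-layer network with one output:
    (multiply-accumulates, activation-function evaluations). Bias additions are ignored."""
    macs = n_inputs * n_hidden + n_hidden     # input->hidden weights, then hidden->output
    activations = n_hidden + 1                # every hidden node plus the output node
    return macs, activations

def boosted_cost(n_inputs, n_hidden, n_learners):
    """Per-event cost of a boosted ensemble of such networks, plus the weighted vote."""
    macs, activations = ann_cost(n_inputs, n_hidden)
    return n_learners * macs + n_learners, n_learners * activations

# Hypothetical sizes, for illustration only:
print(ann_cost(10, 20))          # (220, 21)
print(boosted_cost(10, 2, 100))  # (2300, 300)
```

With these illustrative sizes, the ensemble needs about ten times as many multiply-accumulates per event as the single network. The real ratio depends on the network sizes and the number of boosting rounds actually used.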